
Thread: Anandtech News

-

03-14-14, 07:34 AM #3731

Anandtech: NVIDIA Announces Spring 2014 GeForce Game Bundles

Coinciding with this week’s launch of the GeForce 800M series, NVIDIA is back in the saddle with another set of bundles to help promote GeForce video card and laptop sales.

Replacing the Assassin’s Creed IV bundle that had been running for the last couple of months, NVIDIA’s spring bundle will be a two-tier affair. For purchasers of the GeForce GTX 660/760 and above, NVIDIA will be including a voucher for a Steam copy of the survival horror game Daylight.

Daylight is a game we have admittedly not heard much about thus far, but a bit of research tells us that it comes from Zombie Studios, the developers responsible for the Spec Ops and Blacklight series of games, among others. More excitingly, this will be the first game released that uses Unreal Engine 4, coming out ahead of other titles such as Epic’s own Fortnite. Daylight is set to retail for $15, so this won’t be quite as aggressive a bundle as the outgoing AC4 bundle was.

Meanwhile for customers purchasing GTX 650 and GTX 750 series cards, along with “select GTX 700M/800M-based notebooks,” NVIDIA is launching another one of their free-to-play currency bundles. This spring F2P bundle will be for Warface, Heroes of Newerth, and Path of Exile, with NVIDIA providing a voucher good for $50 of currency in each game. At $150 the retail value of this bundle technically exceeds the value of the cards it’s bundled with, but it goes without saying that NVIDIA is clearly not paying retail prices here. Meanwhile the fact that they’re offering this bundle with laptop sales is quite interesting, since NVIDIA doesn’t typically promote laptop sales in this manner.

NVIDIA Spring 2014 Game Bundles

| Video Card | Bundle |
|---|---|
| GeForce GTX 760/770/780/780Ti/Titan, 660/660Ti/670/680/690 | Daylight |
| GeForce GTX 650/650Ti/750/750Ti | $150 Free-To-Play (Warface, Heroes of Newerth, Path of Exile) |
| Select GTX 700M/800M-based Notebooks | $150 Free-To-Play (Warface, Heroes of Newerth, Path of Exile) |
| GeForce GT 640 (& Below) | None |

Finally, as always, these bundles are being distributed in voucher form, with retailers and etailers providing vouchers with qualifying purchases. So buyers will want to double check whether their purchase includes a voucher for either of the above deals. Checking NVIDIA’s terms and conditions, the codes from this bundle are only good through May 31st, so we don’t expect this bundle will run any longer than 2 months.

More...

-

03-17-14, 08:30 AM #3732

Anandtech: Khronos Announces OpenGL ES 3.1

Coinciding with a mobile-heavy CES 2014, back in January Khronos put out a short announcement stating that they were nearing the release of a new version of OpenGL ES. Dubbed OpenGL ES Next, we were given a high level overview of the features and a target date of 2014. As it turns out that target date was early 2014, and with GDC 2014 kicking off this week, Khronos is formally announcing the next version of OpenGL ES: OpenGL ES 3.1.

Compared to OpenGL ES 3.0, which was announced back in 2012, OpenGL ES 3.1 is a somewhat lower key announcement. Like mainline OpenGL, as OpenGL ES has continued its development it has continued to mature, and in the process the rate of advancement and need for overhauls has changed. As such OpenGL ES 3.1 is geared towards building on top of what ES 2.0 and 3.0 have already achieved, with a focus on bringing the most popular features from OpenGL 4.x down to OpenGL ES at a pace and in an order that makes the most sense for the hardware and software developers.

Version numbers aside, with OpenGL ES 3.1 Khronos has finally built out OpenGL ES to a point where it can be considered a subset of OpenGL 4.x. While OpenGL ES 3.0 was almost entirely constructed from OpenGL 3.x features, OpenGL ES 3.1’s near-exclusive borrowing of OpenGL 4.x features means that several pieces of important functionality found in OpenGL 4.x are now available in OpenGL ES. At the end of the day, though, it’s still a subset of OpenGL that’s lacking other important features.

Anyhow, as it turns out the early OpenGL ES Next announcement was surprisingly thorough. Khronos’s January announcement actually contained the full list of major new features for OpenGL ES 3.1, just without any descriptions to go with them. As such there aren’t any previously unannounced features here, but we do finally have a better idea of where Khronos is aiming with these features, and what kind of hardware it will take to drive them.

Compute Shaders

Compute shader functionality is without a doubt the marquee feature of OpenGL ES 3.1. Compute shader functionality was first introduced in mainline OpenGL 4.3, and at a high level is a much more flexible shader style that is further decoupled from the idiosyncrasies of graphics rendering, allowing developers to write programs that either weren’t possible using pixel shaders, or at best aren’t as efficient. Compute shaders in turn can be used for general purpose (non-graphical) programming tasks, however they’re more frequently used in conjunction with graphics tasks to more efficiently implement certain algorithms. In the gaming space ambient occlusion is a frequent example of a task that compute shaders can speed up, while in the mobile space photography is another oft-cited task that can benefit from compute shaders.

The increased flexibility is ultimately only as useful as software developers can make it, but of all of the features being released in OpenGL ES 3.1, this is the one that has the potential for the most profound increase in graphics quality on mobile devices. Furthermore compute shaders are based on GLSL ES, so they can be a fairly easy shader type for developers to adopt.
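As a rough illustration of the execution model described above, the sketch below emulates in plain Python how a compute dispatch is split into work groups and invocations. The function name `global_invocation_ids` and the doubling "kernel" are hypothetical; the ID formula mirrors how GLSL defines `gl_GlobalInvocationID` (work group ID times work group size plus local invocation ID), and nothing here calls a real OpenGL ES API.

```python
from itertools import product


def global_invocation_ids(num_groups, local_size):
    """Enumerate the global ID of every invocation in a hypothetical dispatch.

    num_groups: (x, y, z) work group counts, as passed to a dispatch call.
    local_size: (x, y, z) invocations per work group, as declared in the shader.
    """
    ids = []
    for group in product(*(range(n) for n in num_groups)):
        for local in product(*(range(n) for n in local_size)):
            # Same relationship GLSL uses for gl_GlobalInvocationID.
            ids.append(tuple(g * s + l for g, s, l in zip(group, local_size, local)))
    return ids


# Two work groups of four invocations each cover an 8-element buffer.
ids = global_invocation_ids((2, 1, 1), (4, 1, 1))
source = [float(i) for i in range(8)]
result = [0.0] * 8
for x, _, _ in ids:
    # Each invocation handles exactly one element, with no rasterization involved.
    result[x] = source[x] * 2.0
```

On real hardware the invocations run in parallel rather than in a loop; the point is only that the work is addressed by index instead of by pixel.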

Separate Shader Objects & Shading Language Improvements

Speaking of shaders, there are also some smaller shader improvements coming in OpenGL ES 3.1. Developers can now more freely mix and match vertex shader and pixel shader programs thanks to separate shader object functionality, breaking up some of the pipelining imposed by earlier versions of OpenGL ES. Meanwhile 3.1 also introduces new bitfield and arithmetic operations, which should make desktop GPU programmers feel a bit more at home.
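For readers unfamiliar with the bitfield and arithmetic operations mentioned above, here is a small Python emulation of what three of them compute on 32-bit unsigned values. The Python function names are my own; they are modeled on the GLSL built-ins `bitfieldExtract`, `bitfieldInsert` and `uaddCarry`, and the sketch ignores the signed variants and out-of-range arguments.

```python
MASK32 = 0xFFFFFFFF  # emulate 32-bit unsigned shader integers


def bitfield_extract(value, offset, bits):
    """Return `bits` bits of `value` starting at bit `offset` (unsigned variant)."""
    if bits == 0:
        return 0
    return (value >> offset) & ((1 << bits) - 1)


def bitfield_insert(base, insert, offset, bits):
    """Replace `bits` bits of `base` at `offset` with the low bits of `insert`."""
    mask = ((1 << bits) - 1) << offset
    return ((base & ~mask) | ((insert << offset) & mask)) & MASK32


def uadd_carry(x, y):
    """Return (sum modulo 2**32, carry bit) for two 32-bit unsigned values."""
    total = x + y
    return total & MASK32, total >> 32


# Pull the second-lowest byte out of a packed value.
green = bitfield_extract(0xABCD1234, 8, 8)
```

Operations like these are typically used for packing and unpacking several small values into a single integer.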

Indirect Draw Commands

Even with the tightly coupled nature of SoCs, SoC-class GPUs generally aren’t much better off than desktop GPUs when it comes to CPU bottlenecking. Consequently there’s performance (and quite possibly battery life) to be had by making the GPU more self-sufficient, which brings up indirect draw commands. Indirect commands allow the GPU to issue its own work rather than requiring the CPU to submit work, thereby freeing up the CPU. Khronos gives a specific example of having a GPU draw out the results of a physics simulation, though there are a number of cases where this can be used.
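To make the idea concrete: with an indirect draw, the draw parameters live in a GPU buffer instead of being passed as function arguments, so a compute shader can fill them in. The sketch below packs such a record on the CPU side purely for illustration. It assumes the four-field layout used by `glDrawArraysIndirect` in OpenGL ES 3.1 (count, instance count, first vertex, and a reserved field that must be zero) and a little-endian host; the helper name is hypothetical.

```python
import struct

# Four 32-bit unsigned integers, little-endian (assumed host byte order).
INDIRECT_FORMAT = "<4I"


def pack_draw_arrays_indirect(count, instance_count, first):
    """Pack one indirect draw record; the final field is reserved and left at zero."""
    return struct.pack(INDIRECT_FORMAT, count, instance_count, first, 0)


# Hypothetical case: a physics pass produced 3000 vertices to draw once.
command = pack_draw_arrays_indirect(count=3000, instance_count=1, first=0)
```

In the scenario Khronos describes, the GPU itself would write these values after the simulation step, and the CPU would only issue a single indirect draw call pointing at the buffer.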

Enhanced Texture Functionality

Finally, OpenGL ES 3.1 will also be introducing new texture functionality into OpenGL ES. Texture multisampling is now in – a technology useful for using anti-aliasing in conjunction with off-screen rendering techniques – as are stencil textures. Texture gather support also makes an appearance, which allows fetching a 2x2 array of texels from a texture in a single operation and is useful for texture filtering.
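A simplified Python model of texture gather is sketched below. It assumes a single-channel texture stored as a list of rows, clamp-to-edge addressing, and a caller that already knows the lower-left texel of the footprint; real hardware derives that footprint from the texture coordinate. The component order follows the GLSL `textureGather` definition, and the helper names are hypothetical.

```python
def texture_gather(texture, i0, j0):
    """Return the 2x2 texel footprint whose lower-left texel is (i0, j0).

    Order matches GLSL textureGather: (i0, j1), (i1, j1), (i1, j0), (i0, j0).
    """
    height, width = len(texture), len(texture[0])
    i1 = min(i0 + 1, width - 1)   # clamp to edge (assumed wrap mode)
    j1 = min(j0 + 1, height - 1)
    return (texture[j1][i0], texture[j1][i1], texture[j0][i1], texture[j0][i0])


def bilinear_from_gather(gathered, fx, fy):
    """Custom bilinear filter built from one gather instead of four fetches."""
    t01, t11, t10, t00 = gathered
    bottom = t00 + (t10 - t00) * fx
    top = t01 + (t11 - t01) * fx
    return bottom + (top - bottom) * fy


texels = [[0.0, 1.0],
          [2.0, 3.0]]
footprint = texture_gather(texels, 0, 0)
center = bilinear_from_gather(footprint, 0.5, 0.5)
```

The benefit on a GPU is that the four texels arrive from one instruction, which is what makes custom filters such as shadow map filtering cheaper.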

Moving on, for today’s release Khronos also laid out their plans for conformance testing for OpenGL ES 3.1. OpenGL ES 3.1 has already been finalized – this isn’t a draft specification – but the conformance tests are still a work in progress. Conformance testing is largely an OEM concern, but from a consumer perspective it’s notable since it means that OEMs and chip/IP vendors technically can’t label their parts as OpenGL ES 3.1 compliant until they pass the necessary tests. Khronos tells us that conformance tests are expected to be available within 3 months, so we should see conformance validation shortly after that.

Finally on the hardware side, again not unlike mainline OpenGL, OpenGL ES 3.1 is designed to be implemented on existing hardware, with many aspects of the standard just providing a formal API to functionality that the hardware can already achieve. To that end, Khronos tells us that a number of existing SoC GPUs will be OpenGL ES 3.1 capable; so OpenGL ES 3.1 can be rolled out to the public without new hardware in those cases.

Unfortunately we don’t have a list of what GPUs will be ES 3.1 capable – the lack of conformance tests being something of a roadblock. But in Khronos’s full press release both NVIDIA and Imagination Technologies note that they expect to fully support OpenGL ES 3.1 in their Kepler and Rogue architectures respectively. No doubt we’ll hear more about this in the coming weeks and months as the other SoC GPU vendors cement their OpenGL ES 3.1 plans and pass their conformance tests.

More...

-

03-17-14, 11:30 AM #3733

Anandtech: Intel Xeon E5-2697 v2 and Xeon E5-2687W v2 Review: 12 and 8 Cores

Intel’s roadmap goes through all the power and market segments, from ultra-low-power parts, smartphones, tablets and notebooks through mainstream desktops and enthusiast desktops to enterprise. Enterprise differs from the rest of the market, requiring absolute stability, uptime and support should anything go wrong. High-end enterprise CPUs are therefore expensive, and because buyers are willing to pay top dollar for the best, Intel can push core counts, frequency and thus price much higher than in the consumer space. Today we look at two CPUs from this segment – the twelve core Xeon E5-2697 v2 and the eight core Xeon E5-2687W v2.

More...

-

03-18-14, 12:30 AM #3734

Anandtech: Evaluating AMD's TrueAudio and Mantle Technologies with Thief

Scheduled for release today is the 1.3/AMD patch for Thief, Square Enix’s recently released stealth action game. Following last month’s Battlefield 4 patch, Thief is the second big push for AMD’s recent Radeon technology initiative, becoming the second game to support Mantle and the first game to support TrueAudio Technology.

Thief has been something of a miss from a Metacritic perspective, but from a technology perspective it’s still a very big deal for AMD and Radeon owners. As a Mantle enabled title it’s the second game to support Mantle and the first single-player game to support it. Furthermore for AMD it showcases that they have Mantle support from more developers than just EA and other Frostbite 3 users, with Square Enix joining the fray. Finally it’s the first Unreal Engine based game to support Mantle, which can be particularly important since Unreal Engine 3 is so widely used and we expect much the same for the forthcoming Unreal Engine 4.

But more excitingly the release of this patch heralds the public release of AMD’s TrueAudio technology. Where Battlefield 4 was the launch title for Mantle, Thief is the launch title for TrueAudio, being the first game to receive TrueAudio support. At the same time it also marks the start of AMD enabling TrueAudio in their drivers, and the start of their TrueAudio promotional campaign. So along with Thief AMD is also going to be distributing demos to showcase the capabilities of TrueAudio, but more on that later.

More...

-

03-18-14, 04:30 AM #3735

Anandtech: ASRock’s AM1 Kabini Motherboards Announced, including 19V DC-In Model

With the socketed version of Kabini formally announced by AMD, motherboard manufacturers are in full swing announcing their lineups for AMD’s budget range. AMD’s goal is to get the Kabini platform to $60 for motherboard and processor, which cuts into motherboard margins and does not often leave much room for innovation. ASRock is doing something a little different on one of their motherboards which is worth a closer examination.

AM1H-ITX

First up is the AM1H-ITX, a mini-ITX motherboard that is equipped with two ways of implementing power. Users can either use a traditional power supply, or use a DC-In jack that draws power from a standard 19V laptop power adaptor.

In terms of small form factor systems, this removes the need for a bulky internal power supply (or even a pico-PSU) and allows a console-esque arrangement, reducing the chassis volume needed to house the system. The motherboard itself supports two DDR3-1600 DIMM slots, a PCIe 2.0 x4 slot, a mini-PCIe slot, four SATA 6 Gbps ports (two from an ASMedia controller), a COM header, a TPM header, four USB 3.0 ports (two via ASMedia), Realtek RTL8111GR gigabit Ethernet and Realtek ALC892 audio.

AM1B-ITX

The AM1B-ITX is another mini-ITX product, this time including a parallel port, four SATA 6 Gbps ports (two from ASMedia), four USB 3.0 ports (two via ASMedia), Realtek RTL8111GR gigabit Ethernet and a Realtek ALC662 audio codec, putting it at a lower price point than the AM1H-ITX. There is no DC-In jack here, nor a mini-PCIe slot; however there is a PCIe 2.0 x4 slot, a COM header, a TPM header and dual DDR3 DIMM slots.

AM1B-M

The other product in the lineup is a micro-ATX offering which seems very stripped down, providing nothing but the basics. Alongside the two DDR3-1600 DIMM slots are two SATA 6 Gbps ports, two USB 3.0 ports on the rear IO, a VGA port, a combination PS/2 port, a COM header, Realtek Ethernet and ALC662 audio, a PCIe 2.0 x4 slot and a PCIe 2.0 x1 slot.

ASRock is expecting these models to be available from 4/9. I am awaiting information on pricing and will update when we get it.

Gallery: ASRock’s AM1 Kabini Motherboards Announced, including 19V DC-In Model

Source: ASRock

Product Pages: AM1H-ITX, AM1B-ITX, AM1B-M

More...

-

03-18-14, 07:30 AM #3736

Anandtech: Crucial M550 Review: 128GB, 256GB, 512GB and 1TB Models Tested

In what is shaping up to be one of the most exciting months for SSDs in quite some time, we've seen Intel's new flagship SSD 730 just a couple of weeks ago and there are at least two more releases coming in the next few weeks...but today it's Crucial's turn. With their new M550 models in hand, we find out what has changed—and what hasn't—since the previous generation M500. Read on for our in-depth review.

More...

-

03-18-14, 12:30 PM #3737

Anandtech: Imagination Announces PowerVR Wizard GPU Family: Rogue Learns Ray Tracing

Taking place this week in San Francisco is the 2014 Game Developers Conference. Though not necessarily a hardware show, in years past we have seen gaming-related product announcements in both the desktop space and the mobile space. This year is no exception, and in the mobile space in particular it looks to be a very busy year. As we saw on Monday, Khronos is at the show announcing OpenGL ES 3.1, with more announcements to come in the days that follow.

But it’s Imagination Technologies that may end up taking the spotlight for the most groundbreaking announcement. Never a stranger to GDC, Imagination is at GDC 2014 to announce their new family of GPU designs. Dubbed “Wizard”, it is a family of GPUs unlike anything we’ve seen before.

To get right to the point then, what is Wizard? In short, Wizard is Imagination’s long expected push for ray tracing in the consumer GPU space. Ray tracing itself is not new to the world of GPUs – it can be implemented somewhat inefficiently in shaders today – but with Wizard, Imagination intends to take it one step further. To that end Imagination has been working on the subject of ray tracing since 2010, including actions such as founding the OpenRL API and releasing dedicated ray tracing cards, and now they are ready to take the next step by introducing dedicated ray tracing hardware into their consumer GPU designs.

The end result of that effort is the Wizard family of GPUs. Wizard is essentially an extension of Imagination’s existing PowerVR Series6XT Rogue designs, taking the base hardware and adding the additional blocks necessary to do ray tracing. The end result is a GPU that is meant to be every bit as capable as Rogue in traditional rasterization rendering, but also possessing the ability to do ray tracing on top of that.

The Wizard Architecture

The first Wizard design, being introduced today, is the GR6500. The GR6500 is a variant of the existing Series6XT GX6450, possessing the same 128 ALU USCA and augmented with Imagination’s ray tracing hardware. From a high level overview there’s nothing new about GR6500’s Rogue-based rasterization hardware, and since we covered that in depth last month in our look at Rogue, we’ll dive right into Imagination’s new ray tracing blocks for Wizard.

The Wizard architecture’s ray tracing functionality is composed of 4 blocks: the Ray Tracing Data Master, the Ray Tracing Unit, the Scene Hierarchy Generator, and the Frame Accumulation Cache. These blocks are built along Rogue’s common bus, and in some cases wired directly to the USC Array, allowing them to both push data onto the rasterization hardware and pull data off of it for further processing.

Starting with the Ray Tracing Data Master, this is the block responsible for feeding ray data back into the rasterization hardware. With the Ray Tracing Unit calculating the ray data and intersections, the data master then passes that data on to the rasterization hardware for final pixel resolution, as the ray data is consumed to calculate those values.

Meanwhile the Ray Tracing Unit (RTU) is the centerpiece of Imagination’s ray tracing hardware. A fixed function (non-programmable) block where the actual ray tracing work goes on, the RTU contains two sub-blocks: the Ray Interaction Processor, and the Coherency Engine. The Ray Interaction Processor in turn is where the ALUs responsible for ray tracing reside, while the Coherency Engine is responsible for keeping rays organized and coherent (close to each other) for efficient processing.

Notably, the RTU is a stand-alone unit. That is, it doesn’t rely on the ALUs in the USC Array to do any of its processing. All ray tracing work takes place in the RTU, leaving the USC Array to its traditional rasterization work and ultimately consuming the output of the RTU for final pixel resolution. This means that the addition of ray tracing hardware doesn’t impact theoretical rasterization performance, however in the real world it’s reasonable to expect that there will be a performance tradeoff from the RTU consuming memory bandwidth.

Finally we have the Scene Hierarchy Generator and Frame Accumulation Cache. Imagination’s description of these units was fairly limited, and we don’t know a great deal about them beyond the fact that the Scene Hierarchy Generator is used in dynamic object updates, while the Frame Accumulation Cache provides write-combining scattered access to the frame buffer. In the case of the latter this appears to be a block intended to offset the naturally incoherent nature of ray tracing, which makes efficient memory operations and caching difficult.

As we mentioned before, while the ray tracing hardware is tied into Wizard’s rasterization hardware and data buses, it fundamentally operates separately from the rasterization hardware. For GR6500 this means that at Imagination’s reference clockspeed of 600MHz it will be able to deliver up to 300 million rays per second, 24 billion node tests per second, and 100 million dynamic triangles per second. This would be alongside GR6500’s 150 GFLOPs of FP32 computational throughput in the USC Array. Of course like all PowerVR designs, this is a reference design licensed as IP to SoC designers, and as such the actual performance for a GR6500 will depend on how high the shipping clockspeed is in any given SoC.
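Since actual performance depends on the shipping clockspeed, the reference figures above can be turned into per-clock rates and rescaled. The short sketch below does that arithmetic; the 450MHz clock is a made-up example rather than an announced product, and the FP32 estimate assumes each of the 128 ALUs retires one fused multiply-add (2 FLOPs) per clock, which is my assumption and not something Imagination states here.

```python
REFERENCE_CLOCK_HZ = 600e6
REFERENCE_RATES = {
    "rays": 300e6,               # rays per second
    "node_tests": 24e9,          # node tests per second
    "dynamic_triangles": 100e6,  # dynamic triangles per second
}


def per_clock(rate_per_second, clock_hz=REFERENCE_CLOCK_HZ):
    """Convert a per-second rate at the reference clock into a per-clock rate."""
    return rate_per_second / clock_hz


def scaled_rates(clock_hz):
    """Linearly rescale the reference rates to another (hypothetical) clockspeed."""
    return {name: rate * clock_hz / REFERENCE_CLOCK_HZ
            for name, rate in REFERENCE_RATES.items()}


def fp32_gflops(alus, clock_hz, flops_per_alu_per_clock=2):
    """Peak FP32 throughput, assuming one FMA (2 FLOPs) per ALU per clock."""
    return alus * flops_per_alu_per_clock * clock_hz / 1e9


rays_per_clock = per_clock(REFERENCE_RATES["rays"])         # 0.5
nodes_per_clock = per_clock(REFERENCE_RATES["node_tests"])  # 40.0
slower_soc = scaled_rates(450e6)                            # hypothetical 450MHz part
peak_gflops = fp32_gflops(128, REFERENCE_CLOCK_HZ)          # about 153.6
```

Under that FMA assumption the 128 ALUs work out to roughly 153.6 GFLOPs at 600MHz, consistent with the rounded 150 GFLOPs figure. Linear scaling is of course an upper bound, since memory bandwidth does not necessarily scale with the GPU clock.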

With that in mind, while we already know a fair bit about Wizard’s design, Imagination isn’t saying a lot about some of the finer implementation details at this time. At a low level we don’t know much about how the RTU’s ALUs are organized and how they gather rays, for example. And at a high level we don’t know what the die size or power consumption cost is for implementing this ray tracing hardware, and consequently how GR6500 compares to GX6430 and GX6650. Unquestionably it’s bigger and more power hungry, we just don’t know to what degree.

Meanwhile it is worth pointing out that Imagination is targeting GR6500 in the same mobile/embedded SoC space as the Rogue GPUs, so it’s clear that the power requirements in particular can’t be too much higher than the rasterization-only Rogue hardware. With GR6500 just now being announced and the typical SoC development cycle putting shipping silicon into 2015 (or later), these are blanks that we expect we’ll be able to fill in as GR6500 gets closer to shipping.

Why Ray Tracing?

With the matter of the Wizard architecture out of the way, this brings us to our second point: why ray tracing?

The short answer is that there are certain classes of effects that can’t easily be implemented on traditional rasterization hardware. The most important of these effects fall under the umbrellas of lighting/shadows, transparencies, and reflections. All of these effects can be implemented today in some manner on traditional rasterization based processes, but in those cases those rasterization-based implementations will come with some sort of drawback, be it memory, geometry, performance, or overall accuracy.

The point then of putting ray tracing hardware in a PowerVR GPU is to offer dedicated, fixed function hardware to accelerate these processes. By pulling them out of the shaders and implementing superior ray tracing based algorithms, the quality of the effect should go up and at the same time the power/resource cost of the effect may very well go down.

The end result is that Imagination is envisioning games that implement a hybrid rendering model. Rasterization is still the fastest and most effective way to implement a number of real-time rendering steps – rasterization has been called the ultimate cheat in graphics, and over the years hardware and software developers have gotten very, very good at this cheating – so the idea is to continue to use rasterization where it makes sense, and then implement ray tracing effects on top of scene rasterization when they are called for. The combination of the two forms a hybrid model that maintains the benefits of rasterization while including the benefits of ray tracing.
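A toy version of the hybrid idea can be sketched in a few lines of Python: treat a surface point and its color as if they had come out of a rasterization pass, then cast a single shadow ray toward the light to decide how brightly to shade it. Everything here is illustrative and assumed (sphere-only occluders, a fixed 0.2 shadow factor, the function names); it is not how Imagination's hardware or any particular engine implements the technique.

```python
import math


def ray_hits_sphere(origin, direction, center, radius, max_t):
    """True if a ray with normalized `direction` hits the sphere before `max_t`."""
    oc = [o - c for o, c in zip(origin, center)]
    b = sum(d * o for d, o in zip(direction, oc))
    c = sum(o * o for o in oc) - radius * radius
    disc = b * b - c
    if disc < 0:
        return False  # the ray misses the sphere entirely
    root = math.sqrt(disc)
    # Small epsilon avoids re-hitting the surface the ray starts on.
    return any(1e-4 < t < max_t for t in (-b - root, -b + root))


def shade(surface_point, base_color, light_pos, occluders):
    """Darken a rasterized color if a shadow ray toward the light is blocked."""
    to_light = [l - p for l, p in zip(light_pos, surface_point)]
    dist = math.sqrt(sum(v * v for v in to_light))
    direction = [v / dist for v in to_light]
    in_shadow = any(ray_hits_sphere(surface_point, direction, center, radius, dist)
                    for center, radius in occluders)
    factor = 0.2 if in_shadow else 1.0  # arbitrary shadow darkening
    return tuple(channel * factor for channel in base_color)


# One "rasterized" point at the origin, a light overhead, a sphere in between.
shadowed = shade((0.0, 0.0, 0.0), (1.0, 0.5, 0.0), (0.0, 10.0, 0.0),
                 [((0.0, 5.0, 0.0), 1.0)])
lit = shade((0.0, 0.0, 0.0), (1.0, 0.5, 0.0), (0.0, 10.0, 0.0),
            [((5.0, 5.0, 0.0), 1.0)])
```

The rasterizer does the bulk of the work and rays are only spent on the one question rasterization answers poorly, namely whether the light is visible from this point.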

On the other hand, Imagination is also being rather explicit in that they are pursuing the hybrid model instead of a pure ray tracing model. Pure ray tracing is often used in “offline” work – rendering CGI for movies, for example – and while other vendors such as Intel have pursued real time ray tracing, this is not the direction Imagination intends to go. Their ray tracing hardware is fixed function, making it small and powerful but also introducing limitations that make it less than ideal for pure ray tracing; a solid match for the hybrid model the company is pursuing.

First Thoughts

However of all the questions Imagination’s ray tracing technology raises, the biggest one is also the simplest one: will Wizard and ray tracing be successful? From a high level overview Imagination’s ideas appear to be well thought out and otherwise solid, but of course we are hardly game developers and as such aren’t in a great position to evaluate just how practical implementing a hybrid rendering model would be. It’s a safe bet that we’re not going to see pure ray tracing for real time rendering any time soon, but what Imagination presents with their hybrid model is something different than the kinds of ray tracing solutions presented before.

From a development point of view, to the benefit of Imagination’s efforts they already have two things in their favor. The first is that they’ve lined up the support of Unity Technologies, developers of the Unity game engine, to support ray tracing. Unity 5.x will be able to use Imagination’s ray tracing technology to accelerate real time lighting previews in editor mode, thereby allowing developers to see a reasonable facsimile of their final (baked) lighting in real time. Meanwhile some game developers already implement ray tracing-like techniques for some of their effects, so some portion of developers do have a general familiarity with ray tracing already.

As for the consumer standpoint, there is a basic question over whether OEMs and end users will be interested in the technology. With Imagination being the first and only GPU vendor implementing ray tracing (so far), they need to be able to convince OEMs and end users that this technology is worthwhile, a task that’s far from easy in the hyper-competitive SoC GPU space. In the end Imagination needs to sell their customers on the idea of ray tracing, and that it’s worth the die space that would otherwise be allocated to additional rasterization hardware. And then they need to get developers to create the software to run on this hardware.

Ultimately by including dedicated ray tracing hardware in their GPUs, Imagination is taking a sizable risk. If their ideas fully pan out then the reward is the first mover advantage, which would cement their place in the SoC GPU space and give them significant leverage as other GPU vendors would seek to implement similar ray tracing hardware. The converse would be that Imagination’s ray tracing efforts fizzle, and while they have their equally rasterization capable Rogue designs to rely on, it means at a minimum they would have wasted their time on a technology that went nowhere.

With that in mind, all we can say is that it will be extremely interesting to see where this goes. Though ray tracing is not new in the world of GPUs, the inclusion of dedicated ray tracing hardware is absolutely groundbreaking. We can’t even begin to predict what this might lead to, but with SoC GPUs usually being an echo of desktop GPUs, there’s no denying that we’re seeing something new and unprecedented with Wizard. And just maybe, something that’s the start of a bigger shift in real time graphics.

Finally, today’s announcement brings to mind an interesting statement made by John Carmack a bit over a year ago when he was discussing ray tracing hardware. At the time he wrote “I am 90% sure that the eventual path to integration of ray tracing hardware into consumer devices will be as minor tweaks to the existing GPU microarchitectures.” As is often the case with John Carmack, it looks like he may just be right after all.

More...

-

03-19-14, 01:01 AM #3738

Anandtech: Android Wear, Moto 360, and LG G Watch: Initial Thoughts

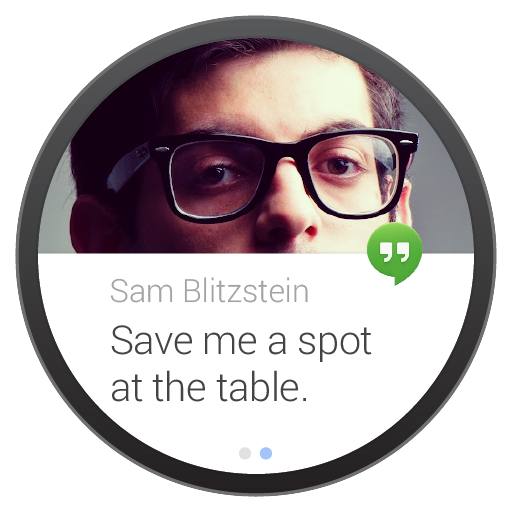

Google has finally announced its entrance into the smartwatch segment with the platform Android Wear. Much like how Android is a broadly adopted platform by many smartphone OEMs, Google hopes to have the same level of adoption with Android Wear. At its most basic level, Android Wear will be centered upon Google Now, which is a way of presenting predictive information in a card format. It will also serve as a touchless control device like the Moto X for voice searches, along with notifications and the ability to act on specific notifications without taking out a phone, such as dictating a text message. If this sounds somewhat familiar, that’s definitely not unusual, because that’s exactly what Google Glass is for. An example of the notification system and the touchless controls can be seen below.

Android Wear isn't just borrowing the Android brand name either, it seems that Android Wear is actually based on Android proper. The big part that Google hopes developers will use is the Wear SDK, which allows for custom UIs, sensor data collection, communication between wearable and phone, and hooking into the touchless control feature.

What is different is the social factor. While Glass is extremely obvious and relatively unacceptable to society due to potential privacy implications, an Android Wear smartwatch wouldn’t have such issues because a camera or video on a watch would be quite conspicuous. While this makes it much easier to see mass-market adoption of smartwatches, the lack of heads-up display functionality removes many of the killer applications that Glass had, such as the potential for live video streaming and navigation without taking one’s eyes off the road. It’s hard to say whether this would mean that the case for a smartwatch is tough to swallow, but in the interim, such a form factor is ideal.

The elephant in the room is why Google is doing this. Ultimately, no one has really figured out how to best implement the smartwatch, and when the vast majority of people seem to expect budget prices on these accessories, it speaks to the perceived utility and value of such accessories. Despite this, Google is pushing on, and I’m convinced that this is the product of a burning desire to maintain the exponential growth that the smartphone market once had, as products like the Moto G are signaling a new era of dramatically depressed profit margins, greatly lengthened upgrade cycles, and OEM consolidation. This mirroring of the PC industry is likely the strongest reason why Google and its hardware partners are now moving to wearables. The other key reason is that Google wants to have the first-mover advantage in case wearables take off, a clear case of learning from past experiences in the smartphone space. The fact that it's now physically possible to have sufficient compute for a usable smartwatch explains the timing as well.

Of course, it’s worth looking at the sorts of designs already announced. The first one that we knew of was announced back at MWC at the Motorola press conference, but now we’ve seen the first images of the actual watch, dubbed the Moto 360, and it’s clear that Motorola understands that the most important part of the watch component has to be industrial design/material feel. While the circular display is undoubtedly more expensive, it’s definitely important as it helps to make the watch actually feel like a watch.

The more traditional smartwatch would be the LG G Watch, which is said to be aimed at creating a low barrier to entry for developing on the Android Wear platform, which parallels the Nexus lineup for Android smartphones and tablets. Neither the LG G Watch nor the Moto 360 has any specific details for the display, SoC, RAM, or any other specifications, but it’s not a stretch to say that these would be running low power CPU cores with 512MB of RAM or less, with display resolutions solidly below VGA (640x480), based upon the size of the display that can be reasonably fitted on a watch for normal wrists.

Ultimately, it’s hard to say whether this will be an enduring trend or a passing fad. I’m reminded of early smartphones, which were often not very well polished as most OEMs had not yet figured out the right formula for solid usability. After all, a watch has generally been for fashion, while phones have always been tools first.

More...

-

03-19-14, 05:30 AM #3739

Anandtech: LEPA Releases 1700W MaxPlatinum Power Supply, EU Only

In the major PC component spaces, there comes a time when a product stack fits nicely with what needs to be done. In the GPU area, we have had discrete and onboard graphics that run normal web computing for 85% of regular users as well as it needs to. But the boundaries are pushed at the upper limit, where resolution and pixel power matter most. With power supplies, it is kind of the same thing – with most desktop computers using sub-400W at peak, there is no real need for these users to spend money on 1000W power supplies. But for the extreme enthusiast end, this need exists. For these users, LEPA has released their 1700W MaxPlatinum power supply as part of their CeBIT 2014 launch.

The MaxPlatinum range features 1050W, 1375W and 1700W models, all 80 PLUS Platinum certified (on 115V, >90% efficient at 20% load, >92% @ 50% load, >89% @ 100% load). The designs are modular, and the 1700W unit measures 180x150x86mm. All units support C6/C7 power states for Haswell and offer up to 10 PCIe 6+2 power connectors depending on the model. The device uses a multi-rail design, with two of the rails at 20A and four rails at 30A. The base design comes from Enermax, and uses a 135mm ball bearing fan with thermal speed control. The official specification sheet, along with cable lengths, can be found here.
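To put those efficiency figures in context, the sketch below works out how much power the 1700W unit would pull from the wall at each certification load point if it only just met the minimum efficiency, plus the combined limit of the six rails. The rail calculation assumes all six are 12V rails, which the text above does not state; the function names are hypothetical.

```python
# Minimum 80 PLUS Platinum efficiencies on 115V, keyed by load fraction.
PLATINUM_115V_MIN_EFFICIENCY = {0.20: 0.90, 0.50: 0.92, 1.00: 0.89}


def wall_draw_watts(rated_watts, load_fraction, efficiency):
    """Input power needed to deliver the given fraction of rated output."""
    return rated_watts * load_fraction / efficiency


def rail_capacity_watts(rail_amps, volts=12.0):
    """Sum of per-rail limits, assuming every rail is a 12V rail."""
    return sum(amps * volts for amps in rail_amps)


worst_case_input = {
    load: wall_draw_watts(1700, load, eff)
    for load, eff in PLATINUM_115V_MIN_EFFICIENCY.items()
}
full_load_heat = worst_case_input[1.00] - 1700  # watts lost as heat at full load
rails_total = rail_capacity_watts([20, 20, 30, 30, 30, 30])
```

At full load and 89% efficiency that comes to roughly 1910W at the wall, with about 210W dissipated as heat. Under the 12V assumption the rail limits add up to 1920W; it is common for the per-rail limits to sum to more than the unit's rated output.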

The title for this news piece includes the phrase ‘EU Only’. This applies to the 1700W model only, which appropriately has the SKU code P1700-MA-EU. The other models will be available in other regions, but having an EU-centric model is a little confusing. This is presumably down to efficiency with a 220-240V input: power supplies tend to achieve higher efficiency ratings at these input voltages, and I wonder if LEPA was not able to hit 80 PLUS Platinum without this input. That would suggest that an 80 PLUS Gold or Silver 1700W edition for other regions might be on the cards.

Addendum: Enermax (who make the unit) has told me that to make a 1700W Platinum model for 110/115V regions requires a different design. At the minute this is not planned, with the G1600 model being the focus for North America.

So the question at the end of the day is still ‘What do you need 1700W for?’ Back when I tested an EVGA SR-2 with dual Westmere-EX Xeons and quad ASUS HD 7970 GPUs, I used a 1600W power supply that was hitting 1550W when the system was overclocked. Or take for example cryptocurrency mining, where five R9 290X GPUs, potentially overclocked, might be powered by a single power supply. There is also a wealth of compute possibilities to be considered. Regular users need not apply.

More...

-

03-19-14, 08:30 AM #3740

Anandtech: Khronos Announces WebCL 1.0, SYCL 1.2, and EGL 1.5 Specifications

Following Monday’s announcement of OpenGL ES 3.1, Khronos is back again with a slate of new standards announcements. Unlike Monday’s focus on OpenGL ES, the bulk of these announcements fall on the compute side of the spectrum, which for Khronos and its members is still a new and somewhat unpredictable market to participate in.

WebCL 1.0

The headliner for today’s specification releases is WebCL, Khronos’s standard for allowing OpenCL within a web browser. Khronos has been working on the standard for nearly 2 years in draft form, and as of today the final specification is being released.

With WebCL, Khronos and its members are looking to do for OpenCL what WebGL has done for OpenGL, which is to make a suitable subset of these APIs available in browsers. With heavy “web application” style websites continuing to grow in popularity, the idea is to expose these APIs to programs running in a web browser, so that they can reap many of the same benefits that native programs get from using the full-fledged version of the respective API. WebGL has already seen some success in offering hardware accelerated 2D/3D graphics within browsers, and now Khronos has turned its eye towards high performance heterogeneous computing with WebCL.

Fundamentally, WebCL is based on the OpenCL 1.1 Embedded Profile. OpenCL 1.1 EP is a scaled down version of OpenCL that was originally designed to be a better fit for embedded and other non-desktop devices, offering a relaxed set of OpenCL 1.1 features that are better matched to the more limited capabilities of hardware in this class. This means that devices and web browsers that implement just the baseline WebCL specification won’t have access to the full capabilities of OpenCL, but many of the differences come down to just floating point precision and memory access modes. Not surprisingly, then, this makes WebCL a lot like WebGL: WebGL is based on OpenGL ES, Khronos’s OpenGL standard for handheld/embedded devices, and now WebCL is based on Khronos’s OpenCL standard for handheld/embedded devices.

Ultimately, with WebCL Khronos is looking at solving the same general issues that led to OpenCL in the first place: the need for high performance computing, chiefly on non-CPU devices. On the consumer desktop OpenCL hasn’t been a massive success so far, but it does have its niches, and those niches are expected to be similar for web based applications. This would mean image manipulation would be a strong use case for WebCL, similar to how we see OpenCL and OpenGL used together on the desktop, with the choice of API reflecting which style of programming is faster for the algorithm at hand. Though as with OpenCL, WebCL is an open ended compute programming environment, so it’s up to programmers to figure out how to best fit it into their workflows.

In the meantime, however, Khronos and its members have first needed to address the potential security implications of WebCL, which is part of the reason it has been in development for so long. The release of WebGL brought to light the fact that GPU drivers weren’t fully hardened against exploitation, because until WebGL there was an implicit assumption that all code was trusted before it was run in the first place, an assumption that is not true for web environments. WebCL in turn has amplified these concerns because it is a far more flexible and powerful environment, and conceivably would be easier to exploit.

The end result is that a number of steps have been taken to secure WebCL against exploits. Chief among these steps, a collection of inherently risky OpenCL features has been dropped, particularly pointers (pointers can be used safely, but often are not). At the same time the WebCL environment itself will undertake its own exploit mitigation efforts; the runtime dynamically checks for exploit behavior, and Khronos is providing a validation tool for developers to do static kernel analysis to identify potential security problems ahead of time. Finally, driver vendors have played their own part in locking down WebCL (being the final failsafe), hardening their drivers against attacks and implementing better context management to ensure that contexts stay separated (the WebCL equivalent of preventing an XSS attack). Ultimately we can only wait and see how well WebCL is going to be able to resist attacks, but it’s clear that Khronos and its members have put a lot of thought and effort into the matter.

SYCL 1.2

Khronos’s second announcement of the day is SYCL 1.2. SYCL (pronounced like sickle) is being released as a provisional specification, and is designed to provide an abstraction layer for implementing C++ on OpenCL. SYCL in turn builds off of SPIR, the standard portable intermediate representation format for OpenCL.

With SYCL, Khronos is looking to solve one of the greatest programmer demands of OpenCL, which is to enable OpenCL programming in C++. OpenCL itself is based on C, and while the languages have similarities, at the end of the day C is functionally a lower level language than C++, both a blessing and a curse in the case of OpenCL. One of the reasons for the success of competing platforms such as CUDA has been their better support for high level languages like C++, so SYCL is Khronos’s attempt to push ahead in that space. And unlike platforms like CUDA, the wider array of hardware OpenCL supports means that SYCL will be focusing on a few features that don’t necessarily exist on alternative platforms, such as single source C++ programming for OpenCL.

The consumer impact of SYCL is going to be minimal (at least at first), but given SYCL’s intended audience it’s expected to be a very big deal for developers. AMD in particular has a vested interest in this, as OpenCL is one of the chief platforms intended to expose their Heterogeneous System Architecture, so having C++ available to OpenCL in turn makes it easier to use C++ to access HSA. Similar principles apply, though, to any program that wants to use C++ to access GPUs and other processors through OpenCL.

Finally, for the moment SYCL is starting out as a provisional specification. Despite the 1.2 version number, this is the first release of SYCL, with the version number indicating which version of OpenCL it’s being designed against, in this case OpenCL 1.2. Future versions of SYCL will target OpenCL 2.0, which should prove to be interesting given OpenCL 2.0’s virtual memory and dynamic parallelism improvements. Though the multi-layered approach of this setup (SYCL is built on SPIR, which in turn is built upon OpenCL) means that SYCL itself will always trail OpenCL to some extent. Even SPIR 1.2 isn’t finalized yet, to give you an idea of where the various standards stand.

EGL 1.5

Khronos’s final announcement of the day is that the EGL specification is now up to 1.5. EGL is not a standard we hear much about, as it’s primarily used by operating systems rather than applications. To that end, EGL in a nutshell is Khronos’s standard for interfacing their other standards (OpenGL, OpenCL, etc) to the native platform windowing system of an OS.

EGL is used rather transparently in a number of operating systems, the most significant of which for the purposes of today’s announcement is Android. Significant portions of the Android rendering system use EGL, which means that certain aspects of Android’s development track EGL and vice-versa. EGL 1.5 in turn is introducing some new features and changes to keep up with Android, chief among these being enhanced support for 64-bit platforms (to coincide with the 64-bit Android transition). Also a highlight in EGL 1.5 is the addition of support for sRGB color rendering, which will make it easier for OS and application developers to properly manipulate images in the sRGB color space, improving color accuracy in a class of products that until recently hasn’t been concerned with such accuracy.
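For readers wondering why explicit sRGB support matters, here is a minimal sketch of the standard sRGB transfer functions in Python. It is not EGL code and uses no EGL API; the function names are purely illustrative. The point it shows is that sRGB values are non-linearly encoded, so operations such as blending need to happen in linear light to give accurate results.

```python
def srgb_to_linear(c):
    """Decode an sRGB-encoded channel value in [0, 1] to linear light."""
    if c <= 0.04045:
        return c / 12.92
    return ((c + 0.055) / 1.055) ** 2.4


def linear_to_srgb(c):
    """Encode a linear-light channel value in [0, 1] as sRGB."""
    if c <= 0.0031308:
        return c * 12.92
    return 1.055 * (c ** (1 / 2.4)) - 0.055


def blend_naive(a, b):
    """Average two sRGB-encoded values directly (the inaccurate shortcut)."""
    return (a + b) / 2


def blend_linear(a, b):
    """Average two sRGB-encoded values in linear light, then re-encode."""
    mixed = (srgb_to_linear(a) + srgb_to_linear(b)) / 2
    return linear_to_srgb(mixed)


if __name__ == "__main__":
    black, white = 0.0, 1.0
    print("naive blend: ", round(blend_naive(black, white), 3))
    print("linear blend:", round(blend_linear(black, white), 3))
```

In this sketch, a 50/50 mix of black and white comes out at about 0.735 when blended in linear light, versus 0.5 when the encoded values are averaged directly. That gap is the kind of inaccuracy that sRGB-aware rendering is meant to avoid.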

Finally, EGL 1.5 also introduces some changes to better support WebGL and OpenCL. These are fairly low level changes, but we’re looking at interoperability improvements to better allow OpenGL ES and OpenCL to work together when EGL is in use, and some new restrictions on graphics context creation to better harden WebGL against attacks.

More...
